Differences between Accuracy and Precision


Comparison Article

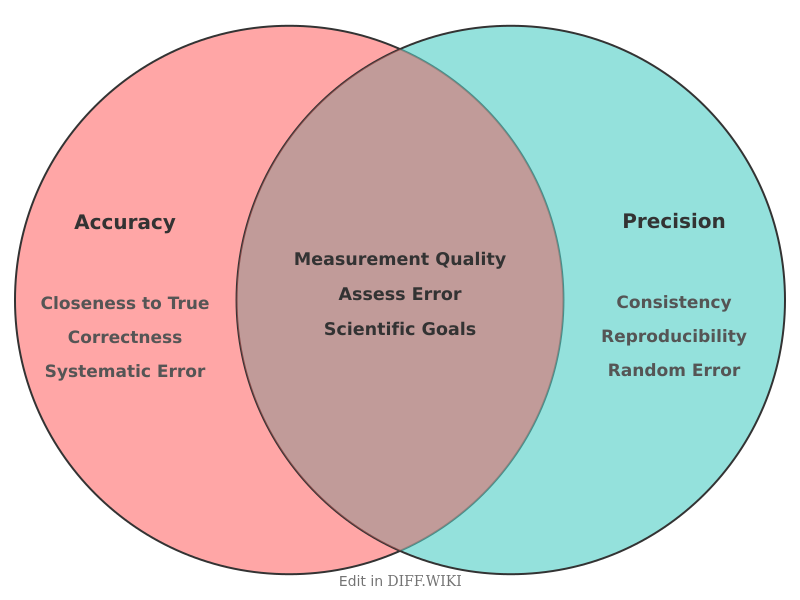

In the fields of science, engineering, and statistics, **accuracy** is the closeness of a measurement to a true or accepted value, while **precision** is the closeness of multiple measurements to each other.[1][2] The two terms are not interchangeable; it is possible for a set of measurements to be precise but not accurate, or accurate but not precise.[1] High-quality measurements are both accurate and precise.[3][4]

A common analogy used to explain the difference is an archer shooting at a target.[1] If the archer's arrows strike close to the bullseye, the shots are accurate. If the arrows are grouped tightly together, the shots are precise. An archer can be precise by grouping all arrows in the same spot, even if that spot is far from the bullseye (precise, but not accurate).[1][4] Conversely, arrows scattered widely around the bullseye, with the center of the scatter on the bullseye, are accurate on average but not precise.
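The archer analogy can be turned into two numbers. The following is a minimal sketch, not a standard definition: it assumes arrow positions are given as hypothetical `(x, y)` coordinates, treats the distance from the average impact point to the bullseye as a stand-in for accuracy, and treats the root-mean-square distance of the arrows from their own average as a stand-in for precision. The function name and the sample data are illustrative assumptions.

```python
import math
import statistics


def accuracy_and_precision(shots, bullseye=(0.0, 0.0)):
    """Return (offset, spread) for a list of (x, y) arrow positions.

    offset: distance from the mean impact point to the bullseye
            (smaller means more accurate).
    spread: root-mean-square distance of the shots from their own mean
            (smaller means more precise).
    """
    mean_x = statistics.fmean([x for x, _ in shots])
    mean_y = statistics.fmean([y for _, y in shots])
    offset = math.hypot(mean_x - bullseye[0], mean_y - bullseye[1])
    squared_distances = [(x - mean_x) ** 2 + (y - mean_y) ** 2 for x, y in shots]
    spread = math.sqrt(statistics.fmean(squared_distances))
    return offset, spread


# Hypothetical data: a tight group far from the bullseye (precise, not accurate).
tight_but_off = [(5.0, 5.1), (5.1, 4.9), (4.9, 5.0), (5.0, 5.0)]

# Hypothetical data: a wide group centered on the bullseye (accurate, not precise).
centered_but_loose = [(3.0, 0.0), (-3.0, 0.0), (0.0, 3.0), (0.0, -3.0)]

for name, shots in [("tight_but_off", tight_but_off),
                    ("centered_but_loose", centered_but_loose)]:
    offset, spread = accuracy_and_precision(shots)
    print(f"{name}: offset={offset:.2f}, spread={spread:.2f}")
```

With this sample data, the tight group has a large offset (about 7.07) and a small spread (about 0.10), while the loose group has an offset of zero and a spread of 3.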

Comparison Table

| Category | Accuracy | Precision |
|---|---|---|
| Definition | Closeness of a measurement to the true value.[4] | Closeness of multiple measurements to one another.[5] |
| Relates to | The correct, known, or accepted value. | The variation among repeated measurements.[5] |
| Measurement Goal | To be correct and close to the true value. | To be consistent and reproducible.[4] |
| Type of Error | Primarily affected by systematic errors. | Primarily affected by random errors. |
| Assessment | Can sometimes be determined with a single measurement.[5] | Requires multiple measurements to evaluate.[5] |
| Target Analogy | Arrows are close to the bullseye.[1] | Arrows are close to each other.[1] |

Relationship to measurement error

The distinction between accuracy and precision is linked to different types of measurement error.

Systematic errors are consistent, repeatable errors that cause measurements to be off by the same amount. A miscalibrated scale that always reads five grams high is an example of a systematic error. Such errors reduce the accuracy of a measurement but do not necessarily affect its precision.
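The miscalibrated-scale example can be checked numerically. This is an illustrative sketch: the reference mass, the list of readings, and the helper names `bias` and `spread` are assumptions made for the example; only the five-gram offset comes from the text.

```python
import statistics

TRUE_MASS_G = 100.0  # hypothetical reference mass
OFFSET_G = 5.0       # the scale always reads five grams high

# Hypothetical readings from a correctly calibrated scale.
calibrated = [99.8, 100.1, 100.0, 100.2, 99.9]

# The same weighings on the miscalibrated scale: every reading shifts by the offset.
miscalibrated = [reading + OFFSET_G for reading in calibrated]


def bias(readings, true_value):
    """Mean reading minus the true value (a measure of systematic error)."""
    return statistics.fmean(readings) - true_value


def spread(readings):
    """Sample standard deviation of the readings (a measure of precision)."""
    return statistics.stdev(readings)


print(f"calibrated:    bias={bias(calibrated, TRUE_MASS_G):+.2f} g, "
      f"spread={spread(calibrated):.2f} g")
print(f"miscalibrated: bias={bias(miscalibrated, TRUE_MASS_G):+.2f} g, "
      f"spread={spread(miscalibrated):.2f} g")
```

The constant offset moves the bias from about zero to about five grams, while the spread of the readings stays the same: accuracy degrades, precision does not.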

Random errors are unpredictable fluctuations in measurements. These can be caused by limitations of the measuring instrument or slight variations in experimental procedure. Random errors reduce precision, causing a spread of results around an average value. Taking the average of many measurements can reduce the effect of random error.
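The effect of averaging can be illustrated with a small simulation. This sketch assumes the random error is Gaussian with a fixed standard deviation, which is a modeling choice made for the example rather than something stated above; the function name, true value, noise level, and seed are all hypothetical.

```python
import random
import statistics


def spread_of_averages(n_per_average, trials=2000, true_value=10.0,
                       noise_sd=1.0, seed=0):
    """Simulate `trials` experiments, each averaging `n_per_average` noisy
    measurements, and return the standard deviation of those averages."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    averages = []
    for _ in range(trials):
        samples = [rng.gauss(true_value, noise_sd) for _ in range(n_per_average)]
        averages.append(statistics.fmean(samples))
    return statistics.stdev(averages)


single = spread_of_averages(1)      # each result is one measurement
averaged = spread_of_averages(100)  # each result is the mean of 100 measurements

print(f"spread of single measurements: {single:.3f}")
print(f"spread of 100-sample averages: {averaged:.3f}")
```

Under these assumptions the spread of the averages shrinks roughly in proportion to the square root of the number of measurements averaged, so averaging 100 measurements gives about one tenth of the single-measurement spread. Averaging does not remove a systematic offset, because every measurement carries the same offset.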

Terminology in standards

Official standards, such as ISO 5725 from the International Organization for Standardization, provide formal definitions for measurement quality. In this context, the term "accuracy" is used to describe the overall correctness of a result and is composed of two components:

- Trueness: The closeness of the average of a large set of measurements to the accepted reference value. This corresponds to the common definition of accuracy and is affected by systematic errors.[2]

- Precision: The closeness of agreement among a set of results. This is affected by random errors.[2]

According to this standard, a measurement is considered accurate only if it possesses both trueness and precision.[2]
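The two-component view can be sketched as a simple check. This is an illustration of the idea only: the function `assess`, the tolerance parameters `max_bias` and `max_spread`, and the sample data are hypothetical and are not taken from ISO 5725, which defines its own procedures for estimating trueness and precision.

```python
import statistics


def assess(results, reference, max_bias, max_spread):
    """Estimate trueness and precision for a set of results.

    Trueness is estimated as the bias (mean minus the accepted reference
    value); precision as the sample standard deviation. The tolerances
    are hypothetical, user-chosen limits.
    """
    bias = statistics.fmean(results) - reference
    spread = statistics.stdev(results)
    trueness_ok = abs(bias) <= max_bias
    precision_ok = spread <= max_spread
    return {
        "bias": bias,
        "spread": spread,
        "trueness_ok": trueness_ok,
        "precision_ok": precision_ok,
        # Accurate in the sense described above only if both hold.
        "accurate": trueness_ok and precision_ok,
    }


REFERENCE = 10.0  # hypothetical accepted reference value

true_and_precise = [10.01, 9.99, 10.00, 10.02, 9.98]
precise_but_biased = [10.51, 10.49, 10.50, 10.52, 10.48]
true_but_scattered = [9.0, 11.0, 10.5, 9.5, 10.0]

for name, results in [("true_and_precise", true_and_precise),
                      ("precise_but_biased", precise_but_biased),
                      ("true_but_scattered", true_but_scattered)]:
    report = assess(results, REFERENCE, max_bias=0.05, max_spread=0.05)
    print(name, report["trueness_ok"], report["precision_ok"], report["accurate"])
```

Only the first data set passes both checks; the second fails on trueness and the third on precision, so neither counts as accurate under this two-component reading.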
